Unit-3 (Short Answer)

a. Define risks and explain the types of risks.

Ans:

Definition of Risks

In the context of software engineering, risks refer to uncertain events or conditions that, if they occur, can have a negative impact on a project's objectives, including its scope, schedule, cost, and quality. Understanding and managing risks is crucial in software development to minimize potential setbacks and ensure project success.

Types of Risks

Risks in software development are generally classified into the following types:

- Technical Risks:

- Definition: Risks arising from the technology choices made during the project lifecycle.

- Examples:

- Unproven technology or tools could lead to difficulties in integration or unexpected challenges.

- Complexity in system design might lead to issues with performance and maintainability.

- Project Management Risks:

- Definition: Risks associated with the planning and execution of the project.

- Examples:

- Poor estimation of resource allocation may lead to budget overruns and project delays.

- Lack of clear project requirements can result in scope creep.

- Organizational Risks:

- Definition: Risks related to the structure, policies, and culture of the organization undertaking the project.

- Examples:

- Changes in management or shifts in company strategy may impact project priorities or support.

- Inconsistent support from stakeholders could lead to stalled development processes.

- External Risks:

- Definition: Risks that originate from outside the project or organization.

- Examples:

- Market changes or economic fluctuations could affect funding or product viability.

- Regulatory changes might impose new compliance requirements on the software being developed.

- Business Risks:

- Definition: Risks that affect the financial and overall strategic aspects of the business.

- Examples:

- Failure to meet market needs may result in a product that doesn't sell, leading to financial loss.

- Competition may arise unexpectedly, altering project objectives.

- Schedule Risks:

- Definition: Risks associated with the timing and delivery of project milestones.

- Examples:

- Delays in deliverables due to unforeseen technical challenges.

- Dependencies on external vendors might lead to bottlenecks.

- Resource Risks:

- Definition: Risks involving the availability and capability of personnel and other resources needed for the project.

- Examples:

- Key team members leaving the project unexpectedly can disrupt progress.

- Insufficient skilled labor might result in underperformance or quality issues.

- Quality Risks:

- Definition: Risks related to the quality of the software product being developed.

- Examples:

- Inadequate testing may allow critical bugs to go undetected until after deployment.

- Lack of adherence to coding standards could result in maintenance challenges.

b. Write a short note on Risk Management.

Ans:

Risk Management in Software Engineering

Risk Management is a systematic process aimed at identifying, analyzing, and responding to risks that may affect a project's outcomes. In software engineering, effective risk management is crucial for minimizing uncertainties and ensuring that projects are completed on time, within budget, and to the specified quality standards.

Key Components of Risk Management

- Risk Identification:

- The first step involves recognizing potential risks that could impact the project. This can be achieved through brainstorming sessions, expert interviews, and historical data analysis. Common tools include checklists and SWOT analysis (Strengths, Weaknesses, Opportunities, Threats).

- Risk Analysis:

- Once risks are identified, they must be analyzed to assess their likelihood of occurrence and potential impact. This analysis can be qualitative (prioritizing risks based on their severity) or quantitative (using numerical methods to estimate probabilities and impacts).

- Risk Prioritization:

- Risks are then prioritized based on their potential impact and likelihood. This helps in focusing on the most critical risks that require immediate attention.

- Risk Response Planning:

- Strategies are formulated to address identified risks. Common response strategies include:

- Avoidance: Altering the project plan to eliminate the risk.

- Mitigation: Implementing measures to reduce the impact or likelihood of the risk.

- Transfer: Shifting the risk to a third party, such as purchasing insurance or outsourcing.

- Acceptance: Acknowledging the risk and choosing to proceed without taking any action, often done for low-impact risks.

- Risk Monitoring and Control:

- This involves continuously tracking identified risks and monitoring new risks that may develop throughout the project lifecycle. Effective communication and reporting mechanisms are essential to ensure that all stakeholders are aware of risk status and any necessary actions.
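To make the analysis and prioritization steps concrete, a common approach is to rank risks by risk exposure, which is the probability of a risk multiplied by its impact. The Python code below is a minimal sketch of that idea, not a prescribed tool. The `Risk` class, the `prioritize` function, and all probabilities and impact figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: float  # likelihood of occurrence, 0.0 to 1.0
    impact: float       # estimated loss if the risk occurs (e.g. person-days)

    @property
    def exposure(self) -> float:
        # Risk exposure: expected loss = probability x impact
        return self.probability * self.impact


def prioritize(risks: list[Risk]) -> list[Risk]:
    """Return the risks ordered from highest to lowest exposure."""
    return sorted(risks, key=lambda r: r.exposure, reverse=True)


# Hypothetical risk register entries
register = [
    Risk("Key developer leaves", probability=0.2, impact=40),
    Risk("Third-party API changes", probability=0.5, impact=10),
    Risk("Requirements creep", probability=0.6, impact=30),
]

for risk in prioritize(register):
    print(f"{risk.name}: exposure = {risk.exposure:.1f}")
```

Sorting by exposure puts the requirements-creep risk (0.6 × 30 = 18) ahead of the key-developer risk (0.2 × 40 = 8), even though the latter has the larger impact.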

Importance of Risk Management

- Enhances Decision-Making: By understanding potential risks, project managers can make informed decisions that align with project goals.

- Improves Project Outcomes: Proactive risk management helps avoid or mitigate potential problems, leading to fewer disruptions and better delivery of project objectives.

- Increases Stakeholder Confidence: A solid risk management plan demonstrates to stakeholders that the project team is prepared for uncertainties, fostering trust and support.

- Facilitates Resource Allocation: Identifying and prioritizing risks allows teams to allocate resources more effectively to high-risk areas, ensuring that critical aspects of the project are adequately supported.

c. Explain McCall's Quality Factors.

Ans:

McCall's Quality Factors

McCall's Quality Factors, developed by Jim McCall and his colleagues in the 1970s, form a framework for understanding and assessing software quality. The model identifies eleven quality factors that contribute to the overall quality of a software product and groups them into three categories; each factor is in turn linked to lower-level criteria that can be measured. The primary goal of these quality factors is to provide a comprehensive evaluation of software quality from different perspectives: how the product behaves in operation, how easily it can be revised, and how easily it can be transitioned to new environments.

1. Product Operation Factors

These factors relate to how well the software performs its intended functions. They include:

- Correctness: The extent to which the software meets its specifications and fulfills users' requirements without errors.

- Reliability: The ability of the software to perform its required functions under stated conditions for a specified period. Reliability implies minimal failures and robust operation.

- Efficiency: The degree to which the software optimally utilizes system resources, such as CPU time, memory space, and input/output operations, to perform its tasks.

- Integrity: The assurance that the system is protected from unauthorized access and that data remains secure.

- Usability: The ease with which users can learn to operate, prepare inputs for, and interpret outputs of the software system. This aspect focuses on user experience and interface design.

2. Product Revision Factors

These factors assess how easily the software can be modified to accommodate changes or enhancements. They include:

- Maintainability: The ease with which the software can be adjusted to correct defects, improve performance, or adapt to a changed environment. High maintainability facilitates effective long-term upkeep.

- Flexibility: The software's ability to accommodate changes without significant impacts on performance or structure. Flexible systems can easily adapt to new requirements.

- Testability: The degree to which the software can be effectively tested to ensure that it functions as intended. High testability simplifies the identification and correction of bugs.

3. Product Transition Factors

These factors deal with how well the software can be moved to new environments, reused elsewhere, and connected to other systems. They include:

- Portability: The ease with which the software can be transferred from one hardware or software environment to another. A portable system can be deployed in diverse settings without extensive modifications.

- Reusability: The extent to which software components can be used in other applications or projects without significant changes. High reusability can lead to cost savings and faster development cycles.

- Interoperability: The ability of the software to work with other systems or software. High interoperability ensures seamless integration with existing technologies and platforms.
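In common textbook presentations of McCall's model, each factor is estimated as a weighted sum of graded metrics, F_q = c1 × m1 + c2 × m2 + ... + cn × mn, where each metric m is graded from 0 (low) to 10 (high) and each c is a weighting (regression) coefficient. The Python sketch below assumes that form. The `factor_score` function, the criteria chosen for maintainability, their grades, and their weights are all hypothetical.

```python
def factor_score(grades: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted sum F_q = sum(c_i * m_i) over the metrics that affect one factor."""
    for name, grade in grades.items():
        if not 0 <= grade <= 10:
            raise ValueError(f"grade for {name!r} must be between 0 and 10")
    # Only metrics that have a weight contribute to this factor
    return sum(weights[name] * grades[name] for name in weights)


# Hypothetical grades (0-10) for criteria assumed to affect maintainability
grades = {"consistency": 7, "conciseness": 6, "modularity": 8,
          "self_documentation": 5, "simplicity": 6}
# Hypothetical weights; they sum to 1 so the score stays on the 0-10 scale
weights = {"consistency": 0.2, "conciseness": 0.1, "modularity": 0.3,
           "self_documentation": 0.2, "simplicity": 0.2}

print(f"Maintainability score: {factor_score(grades, weights):.2f}")
```

Choosing weights that sum to 1 is only a convenience that keeps the result comparable with the 0 to 10 grading scale.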

d. Write a short note on Verification and Validation.

Ans:

Verification and Validation in Software Engineering

Verification and Validation (V&V) are critical processes in software engineering that ensure the quality and functionality of a software product. Although often used interchangeably, they serve distinct purposes in the development lifecycle.

Verification

Verification is the process of evaluating the software at different development stages to ensure that it meets the specified requirements and design specifications. The goal of verification is to confirm that the software is being built correctly. It answers the question, "Are we building the product right?"

Key Points:

- It involves reviews and inspections of work products such as requirements documents, design documents, and code.

- Verification techniques include static analysis, formal methods, and code inspections.

- Activities may include peer reviews, model reviews, and design walkthroughs.

- Verification is typically carried out at various stages of the software development lifecycle, including requirements gathering, design, and coding.

Validation

Validation, on the other hand, is the process of evaluating the software during or at the end of the development process to ensure that it meets the business needs and requirements of the stakeholders. This process is focused on confirming that the right product has been built. It answers the question, "Are we building the right product?"

Key Points:

- Validation activities usually involve executing the software and performing testing to ensure it behaves as expected in real-world scenarios.

- Techniques for validation include functional testing, system testing, acceptance testing, and usability testing.

- It aims to assess the end results by validating the output against the desired requirements and specifications.

Summary of Differences

| Aspect | Verification | Validation |
|--------|--------------|------------|
| Purpose | Ensures compliance with specifications. | Ensures the product fulfills its intended use. |
| Focus | Building the product right (correct processes). | Building the right product (correct outcomes). |
| Activities | Reviews, inspections, static testing. | Testing, execution, user acceptance. |

e. Define software testing and state its principles.

Ans:

Software Testing

Software Testing is the process of evaluating a software application or system to check that it meets specified requirements and to find defects. It involves executing software/system components, manually or with automated tools, to evaluate one or more properties of interest. The primary goal of software testing is to give confidence that the software functions as intended, is of high quality, and satisfies user needs.

Principles of Software Testing

There are several fundamental principles that guide software testing, which help ensure effective testing processes and deliver successful outcomes:

- Testing Shows the Presence of Defects:

- Testing can demonstrate that defects are present in the software but cannot prove that there are no defects. The goal is to identify as many defects as possible, though achieving 100% defect-free software is often impractical.

- Exhaustive Testing is Not Possible:

- Due to the vast number of combinations of inputs, environments, and system interactions, exhaustive testing (testing every possible input and path) is impossible except in trivial cases. Instead, testing should focus on the most critical and likely scenarios based on risk assessment.

- Early Testing:

- Testing should be performed as early as possible in the software development lifecycle (SDLC). Early testing helps identify defects sooner, reducing development costs and improving quality. It is preferable to catch defects during the requirements or design phases rather than during later stages.

- Defect Clustering:

- A small number of modules or components are often responsible for a large portion of the defects. This principle suggests that testing efforts should focus on areas of the software with a history of issues or complex functionality.

- Pesticide Paradox:

- Running the same set of tests repeatedly will not yield new information about potential defects. To improve testing effectiveness, testers need to continually create new test cases or modify existing ones to uncover new defects.

- Testing is Context-Dependent:

- The approach to testing should vary based on the type of application (e.g., web applications, mobile applications, safety-critical systems). Different contexts may require different testing strategies and techniques.

- Absence of Errors Fallacy:

- Just because the software is free from known defects does not mean it is ready for release. A product can be technically correct but still fail to meet user needs or business objectives. Validation through user acceptance testing is essential.
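A quick calculation shows why exhaustive testing is impractical even for a tiny interface. The Python sketch below assumes a hypothetical function that takes two 32-bit integer inputs and an optimistic rate of one billion test executions per second; `exhaustive_test_years` is an illustrative helper.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def exhaustive_test_years(input_bits: int, tests_per_second: float) -> float:
    """Years needed to try every combination of `input_bits` bits of input."""
    combinations = 2 ** input_bits
    return combinations / tests_per_second / SECONDS_PER_YEAR


# Two 32-bit integer parameters -> 64 bits of input -> 2**64 combinations
years = exhaustive_test_years(input_bits=64, tests_per_second=1e9)
print(f"About {years:.0f} years")
```

The result is roughly 585 years, and that ignores internal state, environment, and timing, which multiply the space further.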

f. Explain bug life cycle with diagram.

Ans:

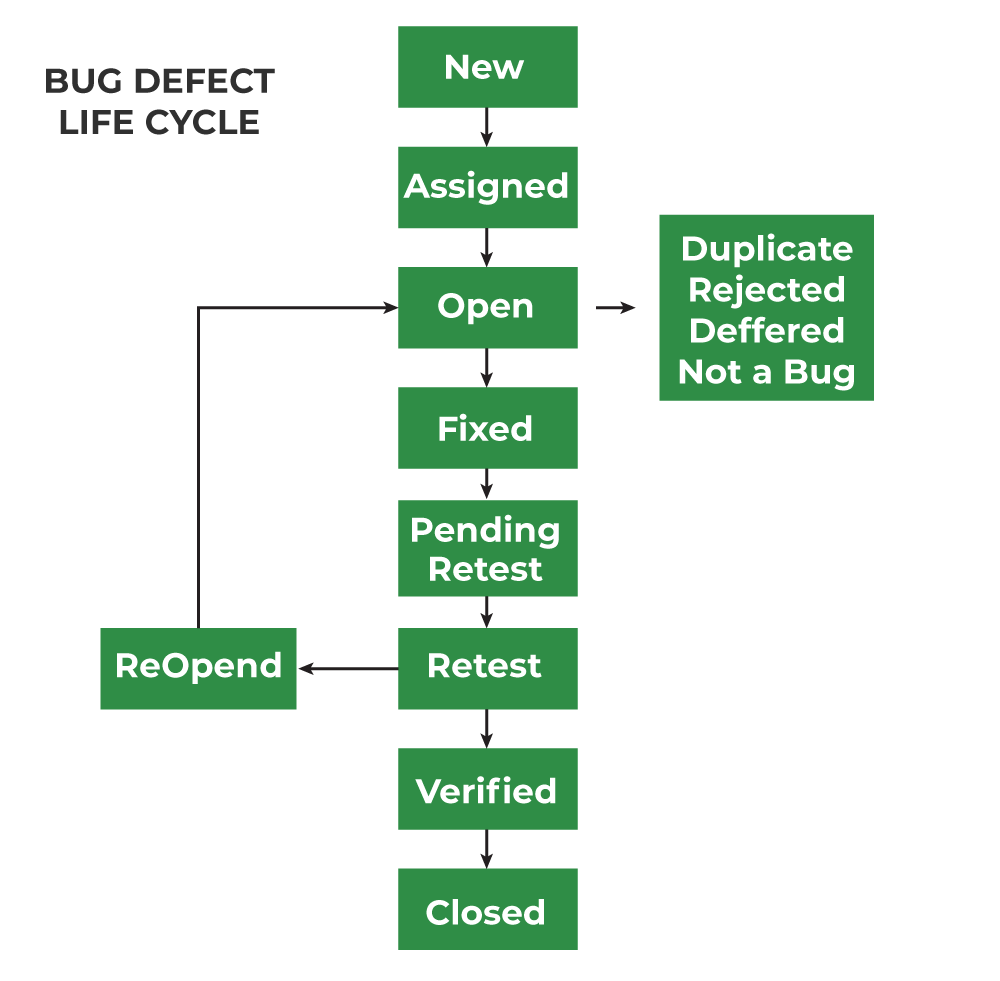

Bug Life Cycle - Explanation with Diagram

What is a Bug Life Cycle?

A Bug Life Cycle (also known as the Defect Life Cycle) is the process a software defect goes through from its discovery to its closure. It consists of various stages that a bug passes through during its lifetime in the software development process.

Stages in the Bug Life Cycle (as shown in the diagram)

- New:

- A new defect is identified and logged.

- It is assigned a unique identification number.

- The details of the bug, such as description, module, severity, and steps to reproduce, are documented.

- Assigned:

- The bug is assigned to a developer or a team member for further analysis.

- The developer reviews the defect and decides how to handle it.

- Open:

- The developer acknowledges the bug and starts working on it.

- If the bug is valid, the developer proceeds to fix it.

- If the bug is invalid or will not be fixed in the current release, it may be marked as:

- Duplicate: The issue has already been reported.

- Rejected: The defect is not valid or does not impact functionality.

- Deferred: The bug fix is postponed to a later release.

- Not a Bug: The reported issue is expected behavior rather than a defect.

- Fixed:

- The developer fixes the bug and updates the status.

- The fixed bug is then sent for retesting.

- Pending Retest:

- The bug is marked as Pending Retest and assigned to the testing team.

- Retest:

- The testing team verifies whether the bug has been successfully fixed.

- If the issue persists, the bug is reopened, and the cycle continues.

- If the bug is resolved, it moves to the next stage.

- Verified:

- If the tester confirms the bug is successfully fixed and no longer exists, it is marked as Verified.

- Closed:

- Once the bug is confirmed as fixed, the status is changed to Closed and the defect life cycle ends.
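The stages above behave like a state machine: each status allows only certain next statuses. The Python sketch below encodes the transitions described in this answer. The `BugState` and `Bug` names are hypothetical; the Reopened state comes from the Retest step, and sending a reopened bug back to Assigned is an assumption, since defect trackers differ. Duplicate, Rejected, Deferred, and Not a Bug are treated as end states for simplicity.

```python
from enum import Enum


class BugState(Enum):
    NEW = "New"
    ASSIGNED = "Assigned"
    OPEN = "Open"
    FIXED = "Fixed"
    PENDING_RETEST = "Pending Retest"
    RETEST = "Retest"
    VERIFIED = "Verified"
    CLOSED = "Closed"
    REOPENED = "Reopened"
    DUPLICATE = "Duplicate"
    REJECTED = "Rejected"
    DEFERRED = "Deferred"
    NOT_A_BUG = "Not a Bug"


S = BugState
# Allowed transitions; states that are not keys are treated as end states
TRANSITIONS = {
    S.NEW: {S.ASSIGNED},
    S.ASSIGNED: {S.OPEN},
    S.OPEN: {S.FIXED, S.DUPLICATE, S.REJECTED, S.DEFERRED, S.NOT_A_BUG},
    S.FIXED: {S.PENDING_RETEST},
    S.PENDING_RETEST: {S.RETEST},
    S.RETEST: {S.VERIFIED, S.REOPENED},
    S.REOPENED: {S.ASSIGNED},  # assumption: a reopened bug goes back to a developer
    S.VERIFIED: {S.CLOSED},
}


class Bug:
    def __init__(self, bug_id: int, summary: str) -> None:
        self.bug_id = bug_id
        self.summary = summary
        self.state = BugState.NEW
        self.history = [self.state]

    def move_to(self, new_state: BugState) -> None:
        """Change status, rejecting any transition the life cycle does not allow."""
        allowed = TRANSITIONS.get(self.state, set())
        if new_state not in allowed:
            raise ValueError(f"cannot move from {self.state.value} to {new_state.value}")
        self.state = new_state
        self.history.append(new_state)


bug = Bug(101, "Login button unresponsive")
for step in (S.ASSIGNED, S.OPEN, S.FIXED, S.PENDING_RETEST, S.RETEST, S.VERIFIED, S.CLOSED):
    bug.move_to(step)
print(" -> ".join(s.value for s in bug.history))
```

Encoding the transitions as data makes invalid jumps (for example, closing a bug that was never retested) fail loudly instead of silently corrupting the record.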

g. Explain risk identification methods.

Ans:

Risk Identification Methods in Software Engineering

Risk identification is a crucial step in risk management within software engineering. It involves recognizing potential risks that could affect the success of a project. Various methods can be used to identify risks effectively. Below are some common risk identification methods:

- Brainstorming:

- This technique involves gathering team members and stakeholders to generate a list of potential risks. The open discussion encourages creativity and can uncover risks that might not have been considered individually. The focus should be on creating a comprehensive list without initially critiquing or filtering ideas.

- Checklists:

- Checklists compiled from previous projects, industry standards, or expert knowledge can be used to identify common risks. This method provides a systematic approach to ensure that no potential risk is overlooked. Checklists can be tailored for specific projects based on lessons learned from past experiences.

- Interviews and Surveys:

- Conducting interviews or surveys with stakeholders such as project managers, developers, users, and other team members can provide valuable insights into potential risks. This method captures diverse perspectives and experiences, helping to identify risks that may not be immediately apparent.

- Expert Judgment:

- Involving experts who have experience in similar projects can be beneficial for identifying risks. They can provide insights based on their understanding of potential challenges and pitfalls. Creating a panel of experts to assess risks can lead to a comprehensive identification process.

- SWOT Analysis:

- SWOT (Strengths, Weaknesses, Opportunities, Threats) analysis evaluates both internal and external factors that could pose risks. By examining weaknesses (internal) and threats (external), teams can better understand the risks associated with the project and its environment.

- Historical Data Analysis:

- Analyzing data from past projects can provide insights into common risks. By reviewing documentation such as post-mortem reports or risk logs, teams can identify patterns of risks that previously affected similar projects and apply that knowledge to current efforts.

- Root Cause Analysis:

- This method involves examining the underlying causes of past failures or issues. By understanding what led to previous problems, teams can identify potential risks that could arise in future projects. Techniques like the “5 Whys” can be useful for delving into root causes.

- Mind Mapping:

- Mind mapping is a visual technique that allows teams to organize thoughts and ideas related to potential risks. It helps identify connections and relationships between different risks and can lead to a more comprehensive understanding of the risk landscape.

- Scenario Analysis:

- This method involves considering various scenarios that could impact the project negatively. Team members create hypothetical situations regarding project scope changes, technology failures, or resource availability, assessing risks associated with these scenarios.

- Delphi Technique:

- The Delphi technique is a structured method of gathering expert opinions through multiple rounds of questioning. Experts provide views individually and anonymously, and a facilitator summarizes the feedback. This process continues for several rounds until a consensus is reached on potential risks.
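As an illustration of the checklist method, the Python sketch below records yes/no answers to checklist questions and reports which items point to a risk and which have not been answered yet, so that no item is silently skipped. The checklist items, their IDs, and the `identify_risks` function are hypothetical.

```python
# Hypothetical checklist: item id -> (risk category, question)
CHECKLIST = {
    "T1": ("Technical", "Does the project rely on technology the team has not used before?"),
    "R1": ("Resource", "Is any critical skill held by only one team member?"),
    "P1": ("Project Management", "Are any requirements still undocumented or disputed?"),
    "E1": ("External", "Does delivery depend on an external vendor's schedule?"),
}


def identify_risks(answers: dict[str, bool]) -> tuple[list[str], list[str]]:
    """Split checklist item ids into identified risks ('yes') and unanswered items."""
    identified = [item for item in CHECKLIST if answers.get(item) is True]
    unanswered = [item for item in CHECKLIST if item not in answers]
    return identified, unanswered


answers = {"T1": True, "R1": False, "E1": True}  # hypothetical review answers
identified, unanswered = identify_risks(answers)
for item in identified:
    category, question = CHECKLIST[item]
    print(f"[{category}] {question}")
print("Still to answer:", unanswered)
```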

h. Explain Inspection process with diagram.

Ans:

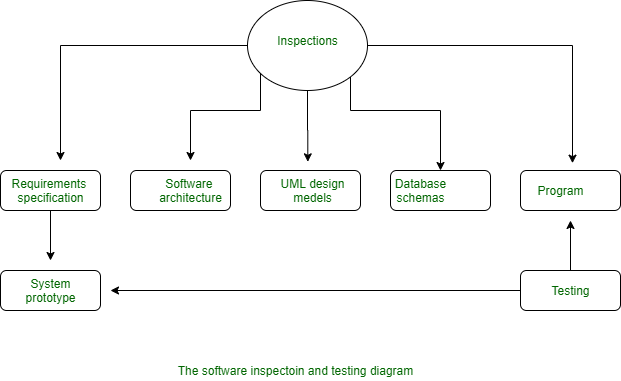

Software Inspection and Testing Diagram - Explanation

What is Software Inspection?

Software inspection is a systematic process of reviewing software artifacts, such as requirements, design documents, code, and test plans, to identify and fix defects early in the software development life cycle. The primary objective of software inspection is to ensure software quality, correctness, and compliance with the specified requirements before testing and deployment.

Explanation of the Diagram

The provided diagram represents the Software Inspection and Testing Process and its key components:

- Inspections (Main Process)

- At the center of the diagram is "Inspections," which serves as the core process to ensure quality across different phases of software development.

- Inspection is performed at various stages to identify defects and improve software quality before testing.

- Key Components Inspected:

- Requirements Specification:

- The process starts with reviewing the software requirements to ensure clarity, completeness, and feasibility.

- The requirements are analyzed for any inconsistencies or ambiguities.

- After approval, the requirements lead to the creation of a System Prototype.

- Software Architecture

- The system architecture is reviewed to ensure that the high-level design meets functional and non-functional requirements.

- This stage ensures that the design aligns with the project goals and user needs.

- UML Design Models

- Unified Modeling Language (UML) diagrams, such as Use Case Diagrams, Sequence Diagrams, and Class Diagrams, are inspected.

- The review process ensures that the system design follows best practices and meets the required functionality.

- Database Schemas

- The structure of the database, including tables, relationships, and constraints, is examined for consistency and optimal performance.

- The schema must be well-structured to support data integrity and efficient querying.

- Program (Code)

- The actual implementation of the software is inspected to ensure that it follows coding standards, best practices, and business logic.

- The program must be reviewed for potential errors, security vulnerabilities, and performance issues.

- Testing

- After inspection and development, the software undergoes testing.

- The testing phase involves functional testing, unit testing, integration testing, and system testing.

- The primary goal is to identify and resolve defects before release.

- System Prototype

- The system prototype is developed based on the Requirements Specification.

- This prototype helps in validating requirements before full-scale development.

- Feedback Loop to System Prototype

- If issues are found during testing, they are fed back into the System Prototype, where corrections are made before the final release.

i. Differentiate between Software Quality Assurance and Software Quality Control.

Ans:

j. Write a short note on metrics for Software quality.

Ans:

Metrics for Software Quality

Software quality metrics are quantitative measures used to evaluate the quality of a software product and the processes involved in its development. These metrics provide insights into various aspects of software quality and are essential for making informed decisions to improve software performance and reliability. Below are some common categories and examples of software quality metrics:

1. Product Quality Metrics

- Defect Density: This measures the number of defects relative to the size of the software product, usually expressed as defects per thousand lines of code (KLOC). A lower defect density indicates higher product quality.

- Reliability: Metrics such as Mean Time To Failure (MTTF), the average operating time before a failure occurs, indicate how often a software product fails during operation, providing insights into its reliability over time.

- Customer Satisfaction: This can be assessed through surveys or feedback mechanisms, indicating how well the product meets user needs and expectations.
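Defect density and MTTF reduce to simple arithmetic. A minimal Python sketch follows; the function names and sample figures are hypothetical, and MTTF is estimated here as the plain average of the observed operating times before each failure.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def mean_time_to_failure(hours_before_failure: list[float]) -> float:
    """Average operating time (hours) observed before each failure."""
    if not hours_before_failure:
        raise ValueError("need at least one observed failure")
    return sum(hours_before_failure) / len(hours_before_failure)


print(defect_density(defects=45, lines_of_code=30_000))  # 1.5 defects per KLOC
print(mean_time_to_failure([120.0, 200.0, 160.0]))       # 160.0 hours
```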

2. Process Quality Metrics

- Defect Arrival Rate: This metric tracks the number of defects identified over a specified time frame, helping teams understand the quality of the software as it progresses through development.

- Process Compliance: Monitoring adherence to defined processes and standards can help identify areas for improvement. Metrics might include the percentage of processes followed correctly or the number of audits passed.

3. Performance Metrics

- Response Time: This measures how quickly a system responds to user queries, which is crucial for user experience. It can be measured under various load conditions to assess performance.

- Throughput: The amount of data processed by the system in a given time period, such as transactions per second, helps to evaluate the efficiency of the software.

4. Maintenance Metrics

- Mean Time To Repair (MTTR): This metric measures how quickly teams resolve defects after they are reported. A lower MTTR indicates a more responsive and efficient maintenance process.

- Change Request Metrics: Tracking the number and types of change requests can provide insights into the maintainability and adaptability of the software.
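MTTR can be computed from defect-tracking timestamps. The Python sketch below is a hypothetical example that treats repair time as the interval from when a defect is reported to when it is resolved; teams may define the start and end points differently, and the `mean_time_to_repair` name and timestamps are illustrative.

```python
from datetime import datetime


def mean_time_to_repair(tickets: list[tuple[datetime, datetime]]) -> float:
    """Average hours between a defect being reported and being resolved."""
    if not tickets:
        raise ValueError("need at least one resolved defect")
    total_seconds = sum((resolved - reported).total_seconds() for reported, resolved in tickets)
    return total_seconds / len(tickets) / 3600


# Hypothetical (reported, resolved) timestamps for three defects
tickets = [
    (datetime(2024, 3, 1, 9, 0), datetime(2024, 3, 1, 13, 0)),   # 4 hours
    (datetime(2024, 3, 2, 10, 0), datetime(2024, 3, 3, 10, 0)),  # 24 hours
    (datetime(2024, 3, 4, 8, 0), datetime(2024, 3, 4, 10, 0)),   # 2 hours
]
print(f"MTTR: {mean_time_to_repair(tickets):.1f} hours")  # MTTR: 10.0 hours
```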

5. Test Quality Metrics

- Test Coverage: This metric indicates the percentage of the codebase tested by automated or manual test cases. Higher test coverage suggests a more thorough testing process.

- Pass/Fail Rate: The ratio of passed tests to total tests executed provides a direct indicator of software quality as measured by the existing test suite.
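Both test metrics are percentages. In the minimal Python sketch below the counts are hypothetical; in practice the executed/passed counts come from a test run and the covered/total statement counts from a coverage tool. Statement coverage is only one kind of coverage (branch coverage is another).

```python
def pass_rate(passed: int, executed: int) -> float:
    """Percentage of executed tests that passed."""
    if executed == 0:
        raise ValueError("no tests executed")
    return 100 * passed / executed


def statement_coverage(covered: int, total: int) -> float:
    """Percentage of executable statements exercised by the test suite."""
    if total == 0:
        raise ValueError("no executable statements")
    return 100 * covered / total


print(f"Pass rate: {pass_rate(passed=188, executed=200):.1f}%")           # 94.0%
print(f"Coverage: {statement_coverage(covered=1530, total=1800):.1f}%")   # 85.0%
```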

k. Explain the process of Risk Management.

Ans:

Process of Risk Management

Risk management is a systematic approach to identifying, assessing, and mitigating risks that can adversely affect an organization’s ability to achieve its objectives. It is particularly crucial in software engineering, where project uncertainties can impact timelines, costs, and product quality. The risk management process typically consists of several key steps:

1. Risk Identification

- Objective: Recognize potential risks that could affect the project.

- Methods: Involves brainstorming sessions, expert interviews, checklists, and review of historical data from similar projects.

- Outcome: A comprehensive list of risks, which can be categorized into areas like technical risks, schedule risks, cost risks, performance risks, and external risks.

2. Risk Assessment

- Objective: Evaluate the identified risks to determine their potential impact and likelihood of occurrence.

- Techniques:

- Qualitative Assessment: Risks are prioritized based on their potential impact (e.g., low, medium, high) and the probability of occurrence (e.g., rare, possible, likely).

- Quantitative Assessment: This involves numerical analysis, such as calculating expected monetary value (EMV) or using statistical methods to understand the potential impact of risks more accurately.

- Outcome: A prioritized risk register that includes both qualitative and quantitative assessments.
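For the quantitative assessment, the EMV of a single risk is commonly computed as probability × monetary impact, with threats given a negative impact and opportunities a positive one; summing the values gives an overall figure for the project. The Python sketch below uses hypothetical risks and amounts, and `expected_monetary_value` is an illustrative helper.

```python
# Hypothetical risks: (description, probability, monetary impact)
# Threats carry a negative impact, opportunities a positive one.
risks = [
    ("Vendor delivers library late", 0.3, -20_000),
    ("Performance rework needed", 0.1, -50_000),
    ("Reusable component saves effort", 0.2, 10_000),
]


def expected_monetary_value(probability: float, impact: float) -> float:
    """EMV of one risk = probability x impact."""
    return probability * impact


for name, p, impact in risks:
    print(f"{name}: EMV = {expected_monetary_value(p, impact):,.0f}")

total = sum(expected_monetary_value(p, impact) for _, p, impact in risks)
print(f"Project EMV: {total:,.0f}")  # Project EMV: -9,000
```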

3. Risk Response Planning

- Objective: Develop strategies to mitigate, transfer, accept, or avoid identified risks.

- Strategies:

- Mitigation: Implement actions to reduce the likelihood or impact of the risk (e.g., using robust testing procedures).

- Transfer: Shift the risk to another party (e.g., outsourcing a component or purchasing insurance).

- Acceptance: Acknowledge the risk without taking any action, often for minor risks where the cost of mitigation is higher than the risk itself.

- Avoidance: Alter project plans to eliminate the risk completely (e.g., changing technology to avoid technical risks).

- Outcome: A risk management plan detailing the actions to be taken for each identified risk.

4. Risk Monitoring and Control

- Objective: Continuously monitor risks and the effectiveness of risk response strategies throughout the project lifecycle.

- Activities:

- Regularly review the risk register and update it based on new information or changes in project conditions.

- Track identified risks and assess the effectiveness of mitigation strategies.

- Utilize Key Performance Indicators (KPIs) to assess and quantify risk management efforts.

- Outcome: An updated risk register and an understanding of the current risk landscape, which informs decision-making.

5. Communication and Reporting

- Objective: Ensure that relevant stakeholders are informed about risks and risk management efforts.

- Methods: Regular reporting of risk status to project stakeholders, including team members and management.

- Outcome: Enhanced awareness and understanding among stakeholders, leading to better collaboration on risk management.

l. Explain Test case design with examples.

Ans:

Test Case Design

Test case design is a crucial part of the software testing process, aimed at ensuring that a software application functions as intended and meets specified requirements. A well-designed test case not only identifies defects but also helps confirm that the software behaves correctly under various conditions. Here’s an overview of the test case design process along with examples.

What is a Test Case?

A test case is a document that specifies a set of conditions or variables under which a tester will determine whether a software application is working as expected. Each test case typically includes the following components:

- Test Case ID: A unique identifier for the test case.

- Description: A brief overview of what the test case is meant to verify.

- Preconditions: Any conditions that must be true before the test can be executed.

- Test Steps: A detailed sequence of actions to perform while testing.

- Expected Result: The anticipated outcome of executing the test steps.

- Actual Result: The actual outcome observed after executing the test steps (filled in during execution).

- Status: Pass/Fail result based on whether the expected result matches the actual result.

- Comments/Notes: Additional information, if necessary.
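The components listed above map naturally onto a record type. The Python sketch below is one hypothetical way to represent a test case, with Status derived by comparing the actual result with the expected result. The `TestCaseRecord` class, its field names, the sample test case, and the exact-string comparison are simplifying assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TestCaseRecord:
    case_id: str
    description: str
    preconditions: list[str]
    steps: list[str]
    expected_result: str
    actual_result: Optional[str] = None  # filled in during execution
    comments: str = ""

    @property
    def status(self) -> str:
        """'Not Run' until an actual result is recorded, then Pass or Fail."""
        if self.actual_result is None:
            return "Not Run"
        return "Pass" if self.actual_result == self.expected_result else "Fail"


tc = TestCaseRecord(
    case_id="TC-EXAMPLE",
    description="Verify an error message for a wrong password",
    preconditions=["User has registered an account"],
    steps=["Open the login page", "Enter a registered email",
           "Enter a wrong password", "Click Login"],
    expected_result="Error message 'Invalid credentials' is shown",
)
print(tc.status)  # Not Run
tc.actual_result = "Error message 'Invalid credentials' is shown"
print(tc.status)  # Pass
```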

Types of Test Case Design Techniques

- Equivalence Partitioning: Divides input data into partitions where the expected behavior is the same, so that one representative value per partition is enough, minimizing the number of test cases.

Example:

- For a function that accepts ages 0-120, test cases could cover:

- Valid: 20 (middle of range)

- Invalid Low: -5 (below 0)

- Invalid High: 150 (above 120)

- Boundary Value Analysis: Focuses on the values at the edges of equivalence classes.

Example:

- For the age function (valid range: 0-120):

- Valid: 0, 1, 119, 120

- Invalid Low: -1 (just below 0)

- Invalid High: 121 (just above 120)
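The partition and boundary values above can be written as executable checks. The Python sketch assumes a hypothetical `is_valid_age` function that accepts 0 to 120 inclusive. It also shows why both techniques are used: an off-by-one bug such as writing `age < 120` would be caught by the boundary value 120 but missed by the partition representative 20.

```python
def is_valid_age(age: int) -> bool:
    """Hypothetical function under test: accepts ages 0-120 inclusive."""
    return 0 <= age <= 120


# Equivalence partitioning: one representative value per partition
equivalence_cases = {20: True, -5: False, 150: False}

# Boundary value analysis: values on and just outside each edge
boundary_cases = {-1: False, 0: True, 1: True, 119: True, 120: True, 121: False}

for age, expected in {**equivalence_cases, **boundary_cases}.items():
    actual = is_valid_age(age)
    assert actual == expected, f"age={age}: expected {expected}, got {actual}"
print("all age test cases passed")
```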

- Decision Table Testing: Useful for capturing complex business rules with multiple inputs and outputs.

Example:

- For a discount system based on membership status (Member/Non-Member) and purchase amount (above/below $100):

| Member | Purchase Amount | Discount |
|--------|-----------------|----------|
| Yes | >100 | 10% |
| Yes | <=100 | 5% |
| No | >100 | 0% |
| No | <=100 | 0% |
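Each row of the decision table becomes one test case. The Python sketch below assumes a hypothetical `discount_rate` function that implements the table, and checks every rule, including a purchase of exactly $100, which the table places in the "<=100" row.

```python
def discount_rate(is_member: bool, purchase_amount: float) -> float:
    """Hypothetical discount rule implementing the decision table."""
    if not is_member:
        return 0.0
    return 0.10 if purchase_amount > 100 else 0.05


# One test case per table row: (member, purchase amount, expected rate)
decision_table = [
    (True, 150, 0.10),
    (True, 100, 0.05),   # exactly 100 falls under the <=100 rule
    (False, 150, 0.0),
    (False, 80, 0.0),
]

for is_member, amount, expected in decision_table:
    assert discount_rate(is_member, amount) == expected, (is_member, amount)
print("all decision-table rules verified")
```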

- State Transition Testing: Evaluates the behavior of the application across different states.

Example:

- For an account lock mechanism:

- States: Unlocked, Locked

- Events: Enter Password (Correct/Incorrect)

- Transitions:

- Enter correct password -> Unlocked (from Locked)

- Enter incorrect password (3 times) -> Locked (from Unlocked)
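The transitions above can be checked with a small model of the account. The Python sketch below is hypothetical: the `Account` class is not from any real system, it counts incorrect attempts only while the account is unlocked, and it assumes a correct password resets the failure count (the example does not say whether the three incorrect attempts must be consecutive).

```python
class Account:
    """Hypothetical account lock following the Unlocked/Locked transitions."""

    MAX_FAILED_ATTEMPTS = 3

    def __init__(self) -> None:
        self.state = "Unlocked"
        self.failed_attempts = 0

    def enter_password(self, correct: bool) -> str:
        if correct:
            # Correct password: Locked -> Unlocked (or stay Unlocked); reset the counter
            self.state = "Unlocked"
            self.failed_attempts = 0
        elif self.state == "Unlocked":
            self.failed_attempts += 1
            if self.failed_attempts >= self.MAX_FAILED_ATTEMPTS:
                self.state = "Locked"
        return self.state


# Test: three incorrect attempts lock the account; a correct one unlocks it
account = Account()
assert [account.enter_password(False) for _ in range(3)] == ["Unlocked", "Unlocked", "Locked"]
assert account.enter_password(True) == "Unlocked"

# Test: a correct password in between resets the failure count
account = Account()
account.enter_password(False)
account.enter_password(False)
account.enter_password(True)
assert account.enter_password(False) == "Unlocked"
print("state transition tests passed")
```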

- Use Case Testing: Derived from use cases to ensure all scenarios have been tested.

Example:

- For a login feature:

- Use Case: User logs into the application.

- Test Cases:

- Valid credentials (successful login)

- Invalid credentials (error message)

- Forgotten password (redirect to recovery page)

Example Test Case

Test Case ID: TC001

Description: Verify successful login with valid credentials.

Preconditions: User has registered an account.

Test Steps:

- Navigate to the login page.

- Enter the registered email address.

- Enter the correct password.

- Click the "Login" button.

m. Explain CMMI Model.

Ans:

CMMI Model

The Capability Maturity Model Integration (CMMI) is a process improvement framework used to develop and refine an organization's processes. It provides organizations with essential elements for effective process improvement, focusing on both product and service development. CMMI integrates concepts from various models and frameworks to create a comprehensive approach to process improvement.

Key Components of CMMI

- Maturity Levels: CMMI is structured into five maturity levels, each representing a different level of process improvement and capability.

- Level 1 (Initial): Processes are unpredictable, poorly controlled, and reactive. Success depends on individual effort.

- Level 2 (Managed): Processes are planned and executed in accordance with policy; they are tracked and controlled. Metrics are used to manage performance and improve predictability.

- Level 3 (Defined): Processes are documented, standardized, and integrated into a coherent process for the organization. They are proactively managed and improved.

- Level 4 (Quantitatively Managed): Processes are controlled using statistical and other quantitative techniques. This allows for a high degree of predictability and performance.

- Level 5 (Optimizing): The focus is on continuous improvement of processes through incremental and innovative process and technology improvements.

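- Process Areas: A process area is a cluster of related practices that, when implemented together, satisfy a set of goals. In the staged representation, each maturity level from Level 2 upward has its own process areas, and an organization must satisfy the process areas of a level and of all lower levels to be rated at that level. CMMI groups its process areas into four categories:

- Project Management: Processes related to planning, monitoring, and controlling project work.

- Engineering: Technical activities including requirements development, design, and product integration.

- Support: Activities that support the other processes, such as configuration management, measurement and analysis, and process and product quality assurance.

- Process Management: Organization-wide activities for defining, deploying, and improving processes (including organizational training) so that they align with organizational goals.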
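Under the staged representation, an organization is rated at the highest maturity level for which it satisfies every process area of that level and of all lower levels. Level 1 has no process areas. The Python sketch below implements that rule. The mapping is abridged: it lists only a few CMMI-DEV v1.3 process area acronyms per level (for example REQM for Requirements Management), and the function name is hypothetical. A real appraisal judges goal satisfaction from evidence, not from a simple set check.

```python
# Abridged, illustrative mapping of maturity levels to process areas
# (acronyms follow CMMI-DEV v1.3; the real model has more areas per level).
PROCESS_AREAS_BY_LEVEL = {
    2: {"REQM", "PP", "PMC", "CM"},  # Requirements Mgmt, Project Planning, Project Monitoring & Control, Config Mgmt
    3: {"RD", "TS", "VER", "OPD"},   # Requirements Dev, Technical Solution, Verification, Org Process Definition
    4: {"OPP", "QPM"},               # Org Process Performance, Quantitative Project Mgmt
    5: {"OPM", "CAR"},               # Org Performance Mgmt, Causal Analysis & Resolution
}


def maturity_level(satisfied: set) -> int:
    """Return the staged maturity level implied by a set of satisfied process areas."""
    level = 1  # Level 1 (Initial) has no process areas, so it is the floor
    for lvl in sorted(PROCESS_AREAS_BY_LEVEL):
        if PROCESS_AREAS_BY_LEVEL[lvl] <= satisfied:  # every area at this level satisfied
            level = lvl
        else:
            break  # a gap at this level blocks every higher level
    return level


if __name__ == "__main__":
    print(maturity_level({"REQM", "PP", "PMC", "CM"}))                            # 2
    print(maturity_level({"REQM", "PP", "PMC", "CM", "OPP", "QPM"}))              # 2 (Level 3 gap)
    print(maturity_level({"REQM", "PP", "PMC", "CM", "RD", "TS", "VER", "OPD"}))  # 3
```

The early `break` is the key design choice: satisfying Level 4 areas does not help an organization that still has a Level 3 gap.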

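- Specific and Generic Goals: Each process area has specific and generic goals that an organization must achieve to satisfy it.

- Specific Goals: Describe the unique characteristics that must be present to satisfy a particular process area (for example, Requirements Management has the specific goal "Manage Requirements").

- Generic Goals: Apply to every process area and describe the institutionalization that makes a process repeatable and lasting, such as establishing a policy, providing resources, training people, and monitoring the process.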

- Implementation and Evaluation: CMMI provides guidelines for organizations to assess their current processes, identify gaps, plan improvements, and evaluate progress.

Benefits of CMMI

- Improved Quality: CMMI emphasizes quality in processes, leading to fewer defects and higher-quality products.

- Increased Efficiency: Streamlined processes reduce waste and enhance operational efficiency.

- Better Project Management: CMMI provides practices for project planning, monitoring, and control, which make schedules and costs more predictable.

- Enhanced Customer Satisfaction: Consistent quality and timely delivery lead to higher levels of customer satisfaction.

- Competitive Advantage: Organizations that adopt CMMI are better equipped to meet market needs and maintain a competitive edge.

n.

Define SQA and state its various elements.

Ans:

Software Quality Assurance (SQA)

Software Quality Assurance (SQA) refers to the systematic processes, activities, and methodologies used to ensure that the software development process adheres to predefined quality standards and requirements. SQA encompasses the entire software development lifecycle, focusing on improving and ensuring the quality of both the software product and the processes used to develop it.

Key Objectives of SQA:

- Ensure that the software meets the specified requirements and standards.

- Identify and resolve defects early in the development process.

- Enhance efficiency and effectiveness in software development.

- Foster continuous improvement of processes and practices.

Elements of SQA

SQA consists of several critical elements that contribute to the overall quality of the software products and the processes involved in their development. These elements include:

- Standards and Procedures:

- Definition of quality standards, policies, and procedures that guide the software development process. This ensures consistency and adherence to best practices.

- Process Definition:

- Establishment of defined processes for software development, maintenance, and testing. Clear processes help in managing quality throughout the software lifecycle.

- Quality Control (QC):

- Activities aimed at identifying defects in the developed software. QC includes testing and review processes to verify that the software works as intended.

- Quality Improvement:

- Continuous evaluation and refinement of processes to enhance software quality over time. This can involve adopting new methodologies or improving existing practices based on feedback and metrics.

- Audits and Reviews:

- Regular assessments and inspections of the software processes and products. These can include formal inspections, walkthroughs, and reviews to ensure compliance with quality standards.

- Metrics and Measurement:

- Implementation of metrics to measure software quality and process effectiveness. Metrics can include defect density, test coverage, and customer satisfaction ratings, providing data for informed decision-making.

- Training and Education:

- Continuous training for team members on quality assurance practices, tools, and methodologies. Well-trained personnel are essential for maintaining high-quality standards.

- Risk Management:

- Identification, assessment, and mitigation of risks that could impact software quality. This proactive approach helps to minimize potential issues before they arise.

- Management Commitment:

- Active involvement and support from management in promoting and enforcing quality standards and practices. Leadership commitment is crucial to fostering a quality-driven culture.

- Documentation:

- Detailed documentation of processes, standards, and quality requirements. Documentation helps ensure transparency, consistency, and knowledge sharing within the team.
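The Metrics and Measurement element lists defect density and test coverage as typical SQA metrics. A minimal Python sketch of how these two figures are commonly computed follows. It assumes defect density is expressed per thousand lines of code (KLOC) and coverage as a percentage of coverage items (such as statements) exercised by tests. The function names and sample figures are hypothetical; other size measures, such as function points, are also used in practice.

```python
def defect_density(defects: int, lines_of_code: int) -> float:
    """Defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


def coverage_percent(covered: int, total: int) -> float:
    """Share of coverage items (e.g. statements) exercised by tests, as a percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * covered / total


if __name__ == "__main__":
    # Hypothetical release figures, for illustration only.
    print(defect_density(45, 30_000))    # 1.5 defects per KLOC
    print(coverage_percent(850, 1_000))  # 85.0
```

Defect density supports fair comparisons between modules or releases only when defects and lines of code are counted the same way for each.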
