SY.Bsc.Cs Sem-3 Based on Mumbai University

Principles of Operating Systems (Unit-1) Question Bank Answers:

1. Explain operating system roles.

Solution:

Operating systems (OS) manage computer resources and keep the system running smoothly. Their main roles are:

Process Management: The OS is responsible for managing processes (programs in execution). It handles process creation, scheduling, execution, and termination. It also manages multitasking, ensuring multiple processes can run concurrently by allocating CPU time to each process.

Memory Management: The OS manages the computer’s memory by allocating and deallocating memory space as needed by processes. It ensures efficient memory usage and prevents conflicts, such as one process overwriting another's data.

File System Management: The OS handles the storage, retrieval, and organization of files on storage devices. It provides a structure (file system) for how data is stored, ensuring data integrity and easy access through directories and files.

Device Management: The OS manages input/output (I/O) devices such as printers, keyboards, and storage drives. It serves as an intermediary between the hardware and software, ensuring smooth communication through drivers and interrupt handling.

Security and Access Control: The OS ensures system security by managing user access to system resources. It controls authentication, user permissions, and access rights, preventing unauthorized access to sensitive data and processes.

2. Explain the functions of the operating system.

Solution:

The operating system (OS) has several key functions that help manage a computer's resources and make it easier for users to interact with the machine. Here are the main functions:

Process Management: The OS handles the execution of programs. It creates, schedules, and terminates processes, ensuring that each program gets enough CPU time and runs smoothly without interference.

Memory Management: The OS controls how the computer's memory is used. It allocates memory to different programs and ensures that no program uses more memory than it should. It also helps in freeing up memory when it's no longer needed.

File System Management: The OS organizes and manages files and directories on storage devices (like hard drives). It provides a way to create, read, write, and delete files and keeps data stored securely and efficiently.

Device Management: The OS manages all input/output devices (like keyboards, printers, and storage devices). It ensures that these devices can communicate with the computer and works with device drivers to handle the hardware.

Security and Access Control: The OS provides security by controlling access to data and resources. It manages user accounts, permissions, and ensures that only authorized users can access sensitive information or make changes to the system.

User Interface: The OS provides a user interface, like graphical windows or command lines, so users can interact with the system easily. It translates user commands into actions the hardware can perform.

Error Detection and Handling: The OS monitors the system for errors, such as hardware failures or software bugs, and takes steps to handle them, ensuring the system remains stable and reliable.

3. What are the services provided by the operating system?

Solution:

Process Management:

- The OS handles the creation, scheduling, and termination of processes. It supports multitasking and process synchronization, and provides mechanisms for dealing with deadlocks.

Memory Management:

- The OS manages the system's main memory (RAM), allocating space to processes when needed and freeing it when no longer in use. This includes memory allocation, deallocation, and virtual memory management.

File System Management:

- It manages the organization, storage, retrieval, and manipulation of data on storage devices (e.g., hard drives, SSDs). The OS ensures proper file naming, file permissions, and directory structures.

Device Management:

- The OS controls hardware devices like printers, disks, and keyboards. It uses device drivers as intermediaries to communicate with hardware, handling input/output (I/O) operations, and ensuring efficient device utilization.

Security and Access Control:

- The OS provides security by enforcing user authentication, protecting against unauthorized access, and implementing permissions for files and system resources. It also protects against malware and security breaches.

4. What is a System call? Explain types of System calls.

Solution:

System Call:

A system call is a mechanism that allows user-level applications to request services from the operating system's kernel. When a program needs to perform a task that requires direct interaction with hardware or critical system resources, it uses system calls to switch from user mode to kernel mode. This ensures that the OS controls access to the hardware and maintains system security and stability.

Types of System Calls:

System calls can be classified into five main categories:

Process Control:

- These system calls manage the creation, execution, and termination of processes. They allow for loading and executing programs, managing process priorities, and handling process synchronization.

- Examples:

fork(), exec(), exit(), wait().

File Management:

- These system calls handle file-related operations such as creating, reading, writing, and deleting files. They also manage file permissions, directories, and the opening and closing of files.

- Examples:

open(), read(), write(), close(), unlink().

Device Management:

- These system calls allow interaction with hardware devices, such as reading from or writing to devices, requesting device status, or managing device drivers.

- Examples:

ioctl(), read(), write(), close().

Information Maintenance:

- These calls retrieve or modify system data, such as time, system statistics, and process status. They also manage system configurations.

- Examples:

getpid(), alarm(), sleep(), settimeofday().

Communication:

- These system calls manage communication between processes, both on the same machine and over networks. They provide mechanisms like message passing, signal handling, and socket communication.

- Examples:

pipe(), shmget(), msgget(), send(), recv().
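As a rough illustration of how several of these categories work together, here is a minimal sketch that uses Python's `os` module, whose functions are thin wrappers around the corresponding system calls on UNIX-like systems. The function name `child_says_hello` and the message text are made up for this example, and it assumes a system where `fork()` is available (not Windows).

```python
import os

def child_says_hello():
    """Fork a child that sends one message to the parent through a pipe.

    Hypothetical helper for illustration; returns (message, exit_code).
    """
    read_fd, write_fd = os.pipe()            # communication: create a pipe
    pid = os.fork()                          # process control: create a child
    if pid == 0:
        # Child process: send the message, then terminate immediately.
        try:
            os.close(read_fd)
            os.write(write_fd, b"hello from child")   # file management: write
            os.close(write_fd)
        finally:
            os._exit(0)                      # process control: terminate
    # Parent process: read the message and wait for the child to finish.
    os.close(write_fd)
    message = os.read(read_fd, 1024)         # file management: read
    os.close(read_fd)
    _, status = os.waitpid(pid, 0)           # process control: wait
    return message.decode(), os.WEXITSTATUS(status)

if __name__ == "__main__":
    print("parent pid:", os.getpid())        # information maintenance
    print(child_says_hello())
```

Each call switches the process into kernel mode for the duration of the request, which is why the child can only reach the parent through the pipe that the kernel manages.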

5. Explain the operating system structure in detail.

Solution:

6. Explain the Process state diagram.

Solution:

Process State Diagram:

The process state diagram illustrates the various stages a process undergoes during its lifecycle in an operating system. Processes transition between different states based on system conditions, user inputs, and resource availability. Understanding these states helps manage process execution, resource allocation, and scheduling efficiently.

Key Process States:

New:

- When a process is created, it enters the new state. The operating system performs initial setup tasks, such as creating the process control block (PCB) and assigning memory.

- Transition: The process moves to the ready state after initialization is complete.

Ready:

- In the ready state, the process is fully prepared to run but is waiting for CPU time. It is placed in the ready queue by the OS scheduler, waiting to be selected for execution.

- Transition: When the CPU is available, the process transitions to the running state.

Running:

- The process is actively executing instructions in the running state. Only one process per CPU core can be in this state at any given time.

- Transition:

- If the process completes execution, it moves to the terminated state.

- If the process issues an I/O request or must wait for an event, it moves to the waiting state.

- If an interrupt occurs or the time slice expires (in time-sharing systems), it transitions back to ready.

Waiting (Blocked):

- The process is in the waiting state when it cannot proceed until some event (such as an I/O operation or resource availability) occurs.

- Transition: Once the event occurs or the required resource becomes available, the process moves back to the ready state.

Terminated (Exit):

- In the terminated state, the process has finished its execution, either successfully or due to an error. The OS cleans up the resources used by the process.

- Transition: After cleanup, the process is removed from the system.

Process State Transitions:

Here is an overview of the possible transitions between these states:

- New → Ready: The process is created and ready to run.

- Ready → Running: The CPU scheduler selects the process for execution.

- Running → Terminated: The process completes its execution.

- Running → Waiting: The process requests I/O or some event that causes it to wait.

- Waiting → Ready: The waiting event is completed, and the process is ready to execute again.

- Running → Ready: In preemptive multitasking, the process is interrupted or its time slice expires, so it returns to the ready state.
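The transitions listed above can be written down as a small state machine. This is only a sketch for checking which moves are legal; the names `TRANSITIONS`, `move`, and `run_lifecycle` are invented for the example and do not come from any real OS.

```python
# Allowed transitions of the five-state model described above.
TRANSITIONS = {
    "new": {"ready"},
    "ready": {"running"},
    "running": {"ready", "waiting", "terminated"},
    "waiting": {"ready"},
    "terminated": set(),
}

def move(state, new_state):
    """Return new_state if the transition is allowed, else raise ValueError."""
    if new_state not in TRANSITIONS[state]:
        raise ValueError(f"illegal transition: {state} -> {new_state}")
    return new_state

def run_lifecycle(path):
    """Walk a process through a sequence of states, starting from 'new'."""
    state = "new"
    for next_state in path:
        state = move(state, next_state)
    return state

if __name__ == "__main__":
    # A process that blocks once for I/O and then finishes.
    print(run_lifecycle(["ready", "running", "waiting",
                         "ready", "running", "terminated"]))
```

Note that a waiting process cannot go straight back to running: it must first return to the ready queue and be selected by the scheduler again.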

7. Explain Process Scheduling in detail.

Solution:

Process Scheduling:

Process scheduling is a crucial function of an operating system that determines the order in which processes are executed by the CPU. It ensures efficient utilization of CPU time and improves system responsiveness, particularly in multitasking environments. Effective scheduling algorithms can greatly affect the performance and efficiency of a system.

Key Objectives of Process Scheduling:

Maximize CPU Utilization:

- Keep the CPU as busy as possible by minimizing idle time.

Maximize Throughput:

- Increase the number of processes completed in a given time frame.

Minimize Turnaround Time:

- Reduce the total time taken to execute a process from submission to completion.

Minimize Waiting Time:

- Decrease the time a process spends waiting in the ready queue.

Minimize Response Time:

- Ensure that users receive a prompt response, particularly important in interactive systems.

Types of Process Scheduling:

Long-term Scheduling:

- Determines which processes are admitted to the system for processing. It controls the degree of multiprogramming (the number of processes in memory). This scheduling is less frequent and happens when a process is created.

- Example: Job scheduling in batch processing systems.

Medium-term Scheduling:

- Temporarily removes processes from main memory and places them in secondary storage (swapping) to manage memory and reduce load. This is used in systems with virtual memory.

- Example: When a process in the waiting state has to be swapped out due to memory constraints.

Short-term Scheduling:

- Also known as CPU scheduling, it selects from among the processes in the ready queue and allocates CPU time. This decision is made frequently, often every few milliseconds.

- Example: Time-sharing systems that frequently switch between processes.

Scheduling Algorithms:

Several algorithms can be used for process scheduling, each with its advantages and disadvantages:

First-Come, First-Served (FCFS):

- Processes are scheduled in the order they arrive in the ready queue.

- Advantages: Simple and easy to implement.

- Disadvantages: Can lead to the "convoy effect," causing long waiting times for shorter processes.

Shortest Job Next (SJN):

- Selects the process with the shortest estimated run time next.

- Advantages: Minimizes average waiting time.

- Disadvantages: The length of the next CPU burst is difficult to predict, and longer processes may starve if shorter ones keep arriving.

Round Robin (RR):

- Each process is assigned a fixed time slice (quantum) in which it can execute. After its time slice, it is placed at the end of the ready queue.

- Advantages: Fairly distributes CPU time among processes, suitable for time-sharing systems.

- Disadvantages: Context switching overhead can reduce efficiency if the time slice is too small.

Priority Scheduling:

- Processes are scheduled based on priority levels. Higher priority processes are executed before lower priority ones.

- Advantages: Ensures critical tasks are executed first.

- Disadvantages: Can lead to starvation of low-priority processes.

Multilevel Queue Scheduling:

- Processes are divided into different queues based on their priority or type (e.g., system processes, interactive processes, batch processes). Each queue can have its own scheduling algorithm.

- Advantages: Flexible and efficient for various types of processes.

- Disadvantages: Complexity in managing different queues.
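To compare the first three algorithms, the sketch below computes the waiting time of each process under FCFS, SJN, and Round Robin. It makes simplifying assumptions that are not part of the answer above: all processes arrive at time 0, context switches cost nothing, and the burst times and the quantum of 4 are illustrative values.

```python
from collections import deque

def fcfs(bursts):
    """Waiting time of each process when run in arrival order."""
    waits, clock = [], 0
    for burst in bursts:
        waits.append(clock)      # the process waited until the CPU was free
        clock += burst
    return waits

def sjn(bursts):
    """Non-preemptive Shortest Job Next; waits are in the original order."""
    order = sorted(range(len(bursts)), key=lambda i: bursts[i])
    waits, clock = [0] * len(bursts), 0
    for i in order:
        waits[i] = clock
        clock += bursts[i]
    return waits

def round_robin(bursts, quantum):
    """Round Robin with a fixed quantum; all processes arrive at time 0."""
    remaining = list(bursts)
    finish = [0] * len(bursts)
    queue = deque(range(len(bursts)))
    clock = 0
    while queue:
        i = queue.popleft()
        run = min(quantum, remaining[i])
        clock += run
        remaining[i] -= run
        if remaining[i] > 0:
            queue.append(i)      # not finished: back to the end of the queue
        else:
            finish[i] = clock
    # With arrival time 0, waiting time = completion time - burst time.
    return [finish[i] - bursts[i] for i in range(len(bursts))]

def average(values):
    return sum(values) / len(values)

if __name__ == "__main__":
    bursts = [24, 3, 3]
    print("FCFS:", average(fcfs(bursts)))
    print("SJN :", average(sjn(bursts)))
    print("RR  :", average(round_robin(bursts, quantum=4)))
```

For burst times 24, 3, and 3 the average waiting time is 17 under FCFS (the convoy effect), 3 under SJN, and about 5.67 under Round Robin with a quantum of 4.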

8. Explain the process control block (PCB).

Solution:

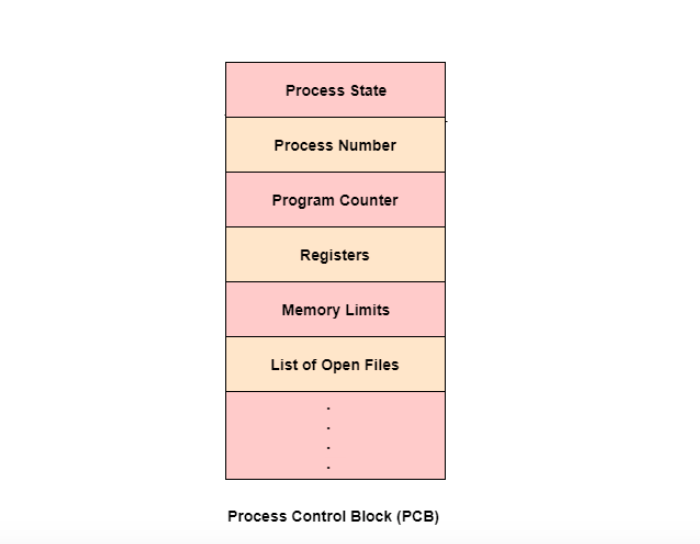

A Process Control Block (PCB) is a crucial data structure used by the operating system to manage information about a specific process. It contains all the necessary details needed to keep track of a process's execution state, resources, and other critical data. The PCB acts as a repository for process-related information and is vital for process scheduling and management.

Key Components of a PCB:

Process Identification Information:

- Process ID (PID): A unique identifier assigned to each process.

- Parent Process ID: The identifier of the process that created this process.

- Process State: Indicates the current state of the process (e.g., New, Ready, Running, Waiting, Terminated).

Process Control Information:

- Program Counter (PC): Holds the address of the next instruction to be executed.

- CPU Registers: Includes the values of various CPU registers that need to be saved during a context switch.

- Scheduling Information: Information regarding the process's priority, scheduling queue pointers, and any time-slice information for algorithms like Round Robin.

Memory Management Information:

- Memory Allocation: Details about the memory allocated to the process, including the base and limit registers (or page tables in virtual memory systems).

- Segment Information: In segmented systems, information about segments (code, data, stack) allocated to the process.

I/O Status Information:

- I/O Devices: Lists the I/O devices currently allocated to the process and their status (e.g., waiting for input/output).

- File Descriptors: Information regarding open files associated with the process, including file handles and access permissions.

Accounting Information:

- CPU Usage: Total CPU time consumed by the process.

- Process Start Time: The time when the process was created.

- Resource Limits: Any limits set on resource usage (e.g., maximum memory or CPU time).
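A PCB can be pictured as a record with one field per item listed above. The following is a simplified sketch, not the layout used by any real kernel; the field names, the dictionary standing in for the CPU, and the `context_switch` helper are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class PCB:
    # Process identification
    pid: int
    parent_pid: int
    state: str = "new"
    # Process control information
    program_counter: int = 0
    registers: dict = field(default_factory=dict)
    priority: int = 0
    # Memory management information
    base: int = 0
    limit: int = 0
    # I/O status information
    open_files: list = field(default_factory=list)
    # Accounting information
    cpu_time_used: float = 0.0

def context_switch(old, new, cpu):
    """Save the CPU state into the old PCB and load it from the new PCB."""
    old.program_counter = cpu["pc"]
    old.registers = dict(cpu["regs"])
    old.state = "ready"
    cpu["pc"] = new.program_counter
    cpu["regs"] = dict(new.registers)
    new.state = "running"

if __name__ == "__main__":
    cpu = {"pc": 100, "regs": {"r0": 1}}
    p1 = PCB(pid=1, parent_pid=0, state="running")
    p2 = PCB(pid=2, parent_pid=0, state="ready",
             program_counter=200, registers={"r0": 7})
    context_switch(p1, p2, cpu)
    print(p1.state, p2.state, cpu)
```

The saved program counter and registers are what allow the first process to resume later exactly where it stopped.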

9. Explain short-term and long-term schedulers with a diagram.

Solution:

Short-Term Scheduler (CPU Scheduler):

Function:

- The short-term scheduler, also known as the CPU scheduler, selects which process from the ready queue will be allocated CPU time next. This decision is made frequently, often in milliseconds, and is responsible for maximizing CPU utilization and system responsiveness.

Characteristics:

- Frequency: Operates on a very short time scale, usually every few milliseconds.

- Responsiveness: Affects the responsiveness of the system, particularly in interactive environments.

- Context Switching: Involves context switching, where the state of the current process is saved, and the state of the next process is loaded.

Example Algorithms:

- First-Come, First-Served (FCFS)

- Shortest Job Next (SJN)

- Round Robin (RR)

- Priority Scheduling

Long-Term Scheduler (Job Scheduler):

Function:

- The long-term scheduler, or job scheduler, determines which processes are admitted to the system for processing. It controls the degree of multiprogramming, which is the number of processes in memory.

Characteristics:

- Frequency: Operates on a longer time scale, typically when new processes are created.

- Degree of Multiprogramming: Affects how many processes can reside in the ready queue and how many processes can run concurrently.

- Job Admission: Decides whether to load a process into memory based on system load and resource availability.

Example:

- Batch processing systems where jobs are queued for processing based on their arrival times and resource requirements.
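The way the long-term scheduler controls the degree of multiprogramming can be sketched as a simple admission loop. This is a toy model under the assumption that the only admission criterion is a fixed limit on the number of processes in memory; `admit_jobs` and `max_degree` are hypothetical names.

```python
from collections import deque

def admit_jobs(job_pool, ready_queue, max_degree):
    """Move jobs from the job pool to the ready queue until the number
    of processes in memory reaches max_degree. Returns the admitted jobs."""
    admitted = []
    while job_pool and len(ready_queue) < max_degree:
        job = job_pool.popleft()
        ready_queue.append(job)
        admitted.append(job)
    return admitted

if __name__ == "__main__":
    job_pool = deque(["J1", "J2", "J3", "J4"])   # jobs waiting on disk
    ready_queue = deque(["P0"])                  # processes already in memory
    print(admit_jobs(job_pool, ready_queue, max_degree=3))
    print(list(ready_queue), list(job_pool))
```

The short-term scheduler then picks only from `ready_queue`, so the long-term scheduler indirectly limits how many processes compete for the CPU.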

10. Explain process memory.

Solution:

Logical vs. Physical Addressing:

- Logical Address: The address generated by the CPU during program execution.

- Physical Address: The actual address in main memory. The memory management unit (MMU), a hardware component that the operating system configures, maps logical addresses to physical addresses.

Virtual Memory:

- Modern operating systems use virtual memory to make the memory available to a process appear larger than the physical memory. This lets several processes run concurrently even when their combined memory needs exceed the available RAM.

- Swapping: The OS can move inactive processes to disk storage to free up RAM, allowing for more efficient memory management.

Segmentation and Paging:

- Segmentation: Divides memory into variable-sized segments based on logical divisions (like functions or data).

- Paging: Divides logical memory into fixed-size blocks (pages) and physical memory into blocks of the same size (frames). Any page can be placed in any free frame, which avoids external fragmentation and allows non-contiguous memory allocation.
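The mapping from a logical address to a physical address under paging can be worked through in a few lines. This is a sketch under assumed values: a page size of 4096 bytes and a made-up page table. Real hardware performs this lookup in the MMU, not in software.

```python
PAGE_SIZE = 4096  # assumed page size in bytes

def translate(logical_address, page_table, page_size=PAGE_SIZE):
    """Translate a logical address using a page -> frame mapping."""
    page, offset = divmod(logical_address, page_size)
    if page not in page_table:
        # The page is not in main memory; a real OS would handle a page fault.
        raise LookupError(f"page fault: page {page} is not in memory")
    frame = page_table[page]
    return frame * page_size + offset

if __name__ == "__main__":
    page_table = {0: 2, 1: 5}            # hypothetical: page 0 -> frame 2, page 1 -> frame 5
    print(translate(4100, page_table))   # page 1, offset 4 -> 5 * 4096 + 4 = 20484
```

The offset within the page is unchanged by translation; only the page number is replaced by a frame number.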

11. What is a thread? Explain it in detail.

Solution:

Key Concepts of Threads:

Definition:

- A thread is a sequence of programmed instructions that can be managed independently by a scheduler. Multiple threads can exist within a single process, sharing the same memory space and resources.

Components of a Thread:

- Thread ID: A unique identifier for each thread.

- Program Counter (PC): Keeps track of the next instruction to execute.

- Registers: Stores the thread's state and data.

- Stack: Contains local variables and function call information specific to the thread.

- Thread State: Indicates whether the thread is ready, running, waiting, or terminated.

Characteristics of Threads:

Lightweight:

- Threads are more lightweight than processes, as they share the same address space and resources. Creating and managing threads typically requires less overhead compared to full process management.

Shared Resources:

- Threads within the same process share code, data, and resources, which allows for efficient communication and data sharing. However, this also introduces challenges in synchronization and data consistency.

Inter-thread Communication:

- Threads can communicate with one another more easily than processes due to their shared memory space. However, this necessitates careful management to avoid issues such as race conditions.

Types of Threads:

User Threads:

- Managed at the user level without kernel intervention. The user-level threading library provides APIs to create and manage threads.

- Advantages: Faster context switching and no kernel overhead.

- Disadvantages: The kernel is unaware of these threads, so a blocking system call made by one thread can block the whole process, and the threads cannot run in parallel on multiple cores.

Kernel Threads:

- Managed by the operating system kernel. The OS is aware of the threads and can schedule them independently.

- Advantages: Better support for multiprocessor systems and system-level thread management.

- Disadvantages: Higher overhead due to context switching and kernel management.

Hybrid Threads:

- Combines both user-level and kernel-level threading, providing benefits of both models. The user-level threads are mapped to kernel threads, allowing flexibility and efficiency.

Advantages of Threads:

Responsiveness:

- Threads can improve application responsiveness, as multiple tasks can be processed simultaneously, allowing for better user interaction.

Resource Sharing:

- Threads share memory and resources, reducing the overhead associated with process creation and communication.

Parallelism:

- On multi-core processors, threads can run in parallel, effectively utilizing CPU resources and enhancing performance.

Challenges of Using Threads:

Synchronization:

- Managing concurrent access to shared resources requires synchronization mechanisms (e.g., mutexes, semaphores) to prevent data inconsistencies and race conditions.

Deadlocks:

- Threads may end up in a deadlock situation where two or more threads are waiting for each other to release resources, leading to a standstill.

Complex Debugging:

- Debugging multithreaded applications can be more challenging due to the non-deterministic nature of thread execution.
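The synchronization challenge can be illustrated with a shared counter protected by a mutex. This is a minimal sketch using Python's standard `threading` module; the function name and the thread and iteration counts are arbitrary choices for the example.

```python
import threading

def count_with_lock(num_threads=4, increments=10_000):
    """Increment a shared counter from several threads under a mutex."""
    counter = 0
    lock = threading.Lock()

    def worker():
        nonlocal counter
        for _ in range(increments):
            with lock:           # only one thread at a time may update counter
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter

if __name__ == "__main__":
    print(count_with_lock())
```

All threads update the same variable because they share one address space. Without the lock, the read-modify-write in `counter += 1` is not guaranteed to be atomic, so updates could be lost.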

12. Differentiate between process and thread.

Solution:

13. Explain the computing environment in the operating system.

Solution:

Key Components of the Computing Environment:

Hardware:

- The physical components of the computer system, including the CPU, memory (RAM), storage devices (HDD/SSD), input/output devices, and network interfaces. The OS interacts directly with hardware to manage resources and execute tasks.

Operating System:

- The OS acts as an intermediary between the hardware and user applications. It manages hardware resources, provides an interface for user interaction, and facilitates process management, memory management, and file system operations.

User Interface:

- The means through which users interact with the computer system. It can be:

- Graphical User Interface (GUI): Offers visual elements like windows, icons, and menus for user interaction (e.g., Windows, macOS).

- Command-Line Interface (CLI): Provides a text-based interface for users to issue commands directly (e.g., Linux shell).

Application Software:

- Programs that run on top of the operating system and perform specific tasks for users, such as word processors, web browsers, and database management systems. Applications rely on the OS for access to hardware resources.

System Services:

- The OS provides various services to manage processes, memory, files, and input/output operations. These include:

- Process Management: Creation, scheduling, and termination of processes.

- Memory Management: Allocation and deallocation of memory, including virtual memory.

- File System Management: Organizing, storing, and retrieving files on storage devices.

- Device Management: Controlling and interfacing with hardware devices.

Network Environment:

- Many modern operating systems support networking capabilities, enabling communication and resource sharing across multiple devices. This includes support for protocols (like TCP/IP), network security, and remote access.

Characteristics of the Computing Environment:

Multitasking:

- The ability of the OS to execute multiple processes or threads concurrently, improving resource utilization and system responsiveness.

Concurrency:

- The OS manages concurrent execution of processes and threads, allowing for efficient scheduling and resource sharing among them.

Resource Management:

- The OS allocates resources such as CPU time, memory, and I/O devices among competing processes to ensure fair and efficient utilization.

Isolation and Protection:

- The OS provides isolation between processes to prevent interference and maintain security, ensuring that processes cannot access each other’s memory space without permission.

Scalability:

- The ability of the computing environment to adapt to increasing workloads, either by upgrading hardware or by optimizing software.

14. Explain multithreading models in detail.

Solution:

Multithreading models define how threads are managed and how they interact with processes and operating system resources. These models impact performance, resource utilization, and the complexity of thread management in applications. Here are the primary multithreading models:

1. Many-to-One Model

Description:

- In the many-to-one model, multiple user-level threads are mapped to a single kernel thread. The thread library manages the scheduling and execution of threads at the user level.

Characteristics:

- User-Level Management: The operating system is not aware of individual user threads; it only sees the single kernel thread.

- Thread Switching: Fast context switching since it occurs in user space without kernel intervention.

- Blocking: If one thread makes a blocking system call, the entire process is blocked, as the kernel is not aware of the other threads.

Advantages:

- Lightweight and efficient in terms of context switching.

- Easy to implement and manage at the user level.

Disadvantages:

- Limited concurrency; if one thread blocks, others cannot run.

- No benefit from multiprocessor systems since all threads are handled by a single kernel thread.

2. One-to-One Model

Description:

- In the one-to-one model, each user thread is mapped to a corresponding kernel thread. The operating system can schedule each thread independently, allowing for better concurrency.

Characteristics:

- Kernel-Level Management: The OS is aware of all user threads, allowing it to manage and schedule them.

- Concurrency: Multiple threads can run simultaneously on multiple processors or cores.

- Blocking: If one thread blocks, it does not affect the execution of other threads.

Advantages:

- Better performance on multiprocessor systems due to independent scheduling.

- Improved responsiveness since one blocking thread does not halt the entire process.

Disadvantages:

- Higher overhead due to the kernel managing multiple threads.

- Slower context switching compared to the many-to-one model.

3. Many-to-Many Model

Description:

- In the many-to-many model, multiple user-level threads are mapped to multiple kernel threads. This model allows for flexible management of threads and can adapt to varying workloads.

Characteristics:

- Dynamic Mapping: The system can create a sufficient number of kernel threads to handle the user threads.

- Scalability: It can efficiently utilize multiple processors and adapt to system workload.

- Blocking: If one thread blocks, other threads can continue to execute.

Advantages:

- Combines the benefits of both many-to-one and one-to-one models, providing both concurrency and efficiency.

- Optimal resource utilization on multiprocessor systems.

Disadvantages:

- Increased complexity in implementation and management.

- Requires a sophisticated scheduling mechanism to manage the mapping of user threads to kernel threads.

4. Hybrid Model

Description:

- The hybrid (two-level) model is a variation of the many-to-many model that also allows a user thread to be bound to a single kernel thread, as in the one-to-one model. This model is often implemented by combining user-level threading libraries with kernel-level support.

Characteristics:

- Flexibility: Allows applications to use both user-level and kernel-level threads as needed.

- Adaptability: Can dynamically adjust the number of kernel threads based on application requirements.

Advantages:

- Balances the benefits of user-level and kernel-level thread management.

- Efficient resource utilization while maintaining responsiveness.

Disadvantages:

- Increased complexity in managing threads and their states.

- Potential for increased overhead if not optimized properly.

15. Explain inter-process communication.

Solution:

Key Concepts of IPC:

Purpose of IPC:

- Enables data exchange between processes.

- Facilitates synchronization and coordination of processes to prevent data inconsistency and conflicts.

- Supports resource sharing in a controlled manner.

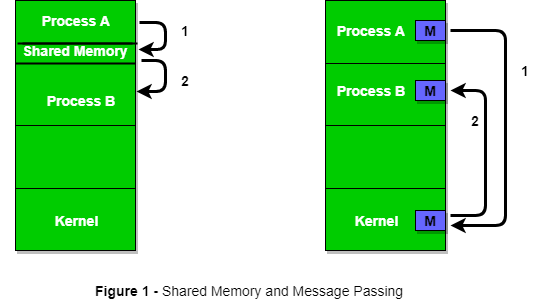

Types of IPC Mechanisms: IPC can be broadly categorized into two types: message-based and shared memory.

A. Message-Based IPC

Description:

- Processes communicate by sending and receiving messages through a communication channel or messaging system.

Examples:

- Pipes: A unidirectional communication channel allowing one process to write data that another process reads.

- Message Queues: A queue where messages can be sent by one process and retrieved by another in a first-in, first-out (FIFO) manner.

- Sockets: Used for communication over a network, allowing processes on different machines to communicate.

Advantages:

- Simple and effective for loosely coupled processes.

- Provides a clear separation between sender and receiver, enhancing modularity.

Disadvantages:

- Can have higher overhead, because each message involves system calls and data copying between the processes and the kernel.

- Communication can be slower compared to shared memory.

B. Shared Memory

Description:

- Multiple processes access a common memory area to read and write data directly.

Examples:

- Shared Memory Segments: A designated area of memory that is mapped into the address space of multiple processes.

Advantages:

- Fast communication, as processes can directly read from and write to the shared memory.

- Efficient for large amounts of data since there is no need for data copying.

Disadvantages:

- Requires careful synchronization (e.g., using semaphores or mutexes) to prevent data corruption and ensure consistency.

- More complex to implement due to the need for synchronization mechanisms.
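Shared-memory IPC can be sketched with an anonymous memory mapping that a parent and its forked child both see. The example assumes a UNIX-like system, where `mmap.mmap(-1, n)` creates a mapping that is shared across `fork()` by default. The function name and the values are illustrative. Waiting for the child with `waitpid` stands in for proper synchronization here.

```python
import mmap
import os
import struct

def shared_counter_demo():
    """Let a child process update a value that the parent then reads."""
    shared = mmap.mmap(-1, 8)                 # anonymous shared mapping (8 bytes)
    shared[:8] = struct.pack("q", 41)         # parent stores the initial value
    pid = os.fork()
    if pid == 0:
        # Child: read the value directly from shared memory and update it.
        try:
            (value,) = struct.unpack("q", shared[:8])
            shared[:8] = struct.pack("q", value + 1)
        finally:
            os._exit(0)
    os.waitpid(pid, 0)                        # wait so the update is complete
    (result,) = struct.unpack("q", shared[:8])
    shared.close()
    return result

if __name__ == "__main__":
    print(shared_counter_demo())              # the child's update is visible: 42
```

No message is sent between the two processes; the child's write is visible to the parent because both map the same physical memory. If both processes wrote at the same time, a semaphore or mutex would be needed.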

Synchronization Mechanisms:

- To ensure data consistency and prevent race conditions, IPC often employs synchronization techniques such as:

- Mutexes: Locking mechanisms to ensure exclusive access to shared resources.

- Semaphores: Signaling mechanisms that allow processes to synchronize based on resource availability.

- Condition Variables: Used for blocking processes until a particular condition is met.

16. Explain single-thread and multi-thread concepts in detail.

Solution:

1. Single-Thread Concept

Definition:

- A single-threaded application uses one thread to execute tasks sequentially. It can handle one task at a time, processing each operation before moving on to the next.

Characteristics:

- Sequential Execution: All tasks are executed in a linear order, with each task waiting for the previous one to complete.

- Simplicity: Easier to implement and manage since there are no concerns about concurrent execution, race conditions, or synchronization.

- Blocking Operations: If a task requires waiting (e.g., for I/O operations), the entire application becomes unresponsive during that wait time.

Advantages:

- Ease of Development: Programming models are straightforward, as developers don't need to manage concurrency.

- Debugging: Simpler debugging process since there’s only one execution flow to trace.

Disadvantages:

- Performance Limitations: Can lead to inefficient resource utilization, especially on multi-core systems where only one core is utilized.

- Responsiveness Issues: User interfaces can become unresponsive during long-running tasks, negatively affecting user experience.

Example:

- A basic command-line application that processes input sequentially without any parallel execution.

2. Multi-Thread Concept

Definition:

- A multi-threaded application uses multiple threads within a single process to execute tasks concurrently. Each thread can perform a separate task or work together to complete a larger task.

Characteristics:

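- Concurrent Execution: Multiple threads make progress during the same period. On a multi-core CPU they can run truly in parallel; on a single core the OS interleaves them by switching rapidly between threads.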

- Shared Resources: Threads within the same process share the same memory space and resources, allowing for efficient communication but requiring careful synchronization.

- Responsiveness: While one thread is waiting (e.g., for I/O), others can continue executing, keeping the application responsive.

Advantages:

- Performance Improvement: Better utilization of CPU resources, especially on multi-core processors, where threads can run in parallel.

- Improved Responsiveness: Applications can handle multiple tasks simultaneously, enhancing user experience in interactive applications.

- Resource Sharing: Threads can communicate easily through shared memory, which can be faster than inter-process communication methods.

Disadvantages:

- Complexity: Increased complexity in programming and design, requiring careful management of thread creation, synchronization, and termination.

- Synchronization Issues: Risks of race conditions and deadlocks, requiring mechanisms like mutexes, semaphores, or condition variables to manage access to shared resources.

- Debugging Challenges: More difficult to debug due to non-deterministic behavior resulting from concurrent execution.

Example:

- A web server that handles multiple client requests simultaneously, where each request is processed in a separate thread, allowing for efficient handling of numerous connections.
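The difference between the two models can be sketched in Python as follows. This is an illustration under assumptions, not a benchmark: fetch is a hypothetical task that simulates an I/O wait with time.sleep, and the function names are invented for this example.

```python
import threading
import time


def fetch(item: int, delay: float = 0.05) -> int:
    """Hypothetical task: pretend to wait on I/O, then return a result."""
    time.sleep(delay)
    return item * 2


def run_single_threaded(items):
    # One thread: each task waits for the previous one to finish.
    return [fetch(item) for item in items]


def run_multi_threaded(items):
    # One thread per task: the I/O waits overlap instead of adding up.
    results = [None] * len(items)

    def worker(index: int, item: int) -> None:
        results[index] = fetch(item)  # each thread writes its own slot

    threads = [
        threading.Thread(target=worker, args=(i, item))
        for i, item in enumerate(items)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Both functions return the same results. The multi-threaded version typically finishes sooner for this kind of workload because the threads wait at the same time rather than one after another.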

17. Explain operations on processes.

Solution:

Operations on Processes

In an operating system, processes are fundamental units of execution. Various operations are performed on processes to manage their lifecycle, scheduling, and communication. Here’s a detailed explanation of the key operations on processes:

1. Process Creation

Definition: The operation that generates a new process. This typically occurs when a process requests to execute another program.

Methods:

- Forking: In UNIX-like systems, the fork() system call creates a new process (child process) that is a duplicate of the calling process (parent process). The child process receives a unique process ID.

- Exec: After creating a new process with fork(), the exec() family of functions can replace the process's memory space with a new program.

- Process Control Block (PCB): The OS creates a PCB for the new process, which contains information like process ID, state, priority, and resources.
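The fork-then-exec pattern can be sketched with Python's os module, which wraps the underlying UNIX system calls. This assumes a UNIX-like system (os.fork is not available on Windows); fork_exec_wait is a hypothetical helper name, and the child simply runs a fresh Python interpreter that exits with a chosen code.

```python
import os
import sys


def fork_exec_wait(exit_code: int) -> int:
    """Fork a child, exec a new program in it, and return its exit status."""
    pid = os.fork()  # returns 0 in the child, the child's PID in the parent
    if pid == 0:
        # Child: replace this process image with a new program.
        try:
            os.execv(
                sys.executable,
                [sys.executable, "-c", f"raise SystemExit({exit_code})"],
            )
        finally:
            os._exit(127)  # reached only if exec itself failed
    # Parent: wait for the child so the OS can release its process entry.
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)
    return -1  # child did not exit normally (for example, killed by a signal)
```

The parent and child run the same code after fork(); the return value of fork() is what lets each side tell which role it has.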

2. Process Termination

Definition: The operation that ends a process's execution. This can occur voluntarily (the process completes its task) or involuntarily (due to errors or external commands).

Methods:

- Exit System Call: A process can terminate itself by invoking the exit() system call, which informs the OS that the process has finished executing.

- Kill Command: A user or another process can ask the OS to terminate a process with commands like kill, which sends a signal (SIGTERM by default) to the target process.

- Cleanup: Upon termination, the OS reclaims resources held by the process, such as memory and file descriptors.
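Both kinds of termination can be observed from a parent process. The sketch below assumes a POSIX system and uses Python's subprocess and signal modules; the function names are hypothetical, and the child programs are throwaway one-liners written for this example.

```python
import signal
import subprocess
import sys


def exit_voluntarily(code: int) -> int:
    """Run a child that ends itself by calling exit with the given code."""
    proc = subprocess.run([sys.executable, "-c", f"import sys; sys.exit({code})"])
    return proc.returncode


def terminate_with_signal() -> int:
    """Start a long-running child, then terminate it from outside."""
    proc = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(60)"])
    proc.send_signal(signal.SIGTERM)  # same signal the kill command sends by default
    return proc.wait(timeout=10)      # the OS reports how the child ended
```

On POSIX systems, subprocess reports a child killed by a signal as a negative return code, so terminate_with_signal() should return the negated SIGTERM number, while exit_voluntarily(3) returns 3.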

3. Process Scheduling

Definition: The operation that decides which processes are to be executed by the CPU at any given time. This involves selecting from among the ready processes.

Methods:

- Scheduling Algorithms: Various algorithms are used to determine the order of process execution, including:

- First-Come, First-Served (FCFS): Processes are scheduled in the order they arrive.

- Shortest Job Next (SJN): The process with the shortest execution time is scheduled next.

- Round Robin (RR): Each process is given a fixed time slice, and processes are scheduled in a circular manner.

- Context Switching: When the CPU switches from executing one process to another, a context switch occurs, which involves saving the state of the current process and loading the state of the next process.
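The three algorithms above can be compared with a small simulation. This is a simplified sketch that assumes all processes arrive at time 0 and ignores context-switch overhead; the function names are hypothetical, and each function returns the waiting time of every process in its original order.

```python
from collections import deque


def fcfs_waits(bursts):
    """First-Come, First-Served: run in arrival order."""
    waits, clock = [], 0
    for burst in bursts:
        waits.append(clock)  # time spent waiting before first (and only) run
        clock += burst
    return waits


def sjn_waits(bursts):
    """Shortest Job Next: run the shortest burst first (non-preemptive)."""
    order = sorted(range(len(bursts)), key=lambda i: bursts[i])
    waits, clock = [0] * len(bursts), 0
    for i in order:
        waits[i] = clock
        clock += bursts[i]
    return waits


def rr_waits(bursts, quantum):
    """Round Robin: each process runs for at most `quantum` per turn."""
    remaining = list(bursts)
    queue = deque(range(len(bursts)))
    finish = [0] * len(bursts)
    clock = 0
    while queue:
        i = queue.popleft()
        run = min(quantum, remaining[i])
        clock += run
        remaining[i] -= run
        if remaining[i] > 0:
            queue.append(i)  # not finished: go to the back of the queue
        else:
            finish[i] = clock
    # Waiting time = completion time minus time actually spent on the CPU.
    return [finish[i] - bursts[i] for i in range(len(bursts))]
```

For burst times of 24, 3 and 3, FCFS gives waiting times of 0, 24 and 27 (average 17), SJN gives 6, 0 and 3 (average 3), and Round Robin with a quantum of 4 gives 6, 4 and 7.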


4. Process Synchronization

Definition: The operation that ensures processes cooperate correctly when accessing shared resources, preventing race conditions and ensuring data consistency.

Methods:

- Locks and Mutexes: Mechanisms that allow only one process to access a resource at a time.

- Semaphores: Signaling mechanisms that can be used to control access to shared resources based on conditions.

- Condition Variables: Used to block a process until a certain condition is met, allowing for more fine-grained control over synchronization.
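As an illustration of a counting semaphore, the sketch below limits how many workers may use a resource at once. It assumes threads as stand-ins for processes, and the names run_with_limit, slots and peak are hypothetical names chosen for this example.

```python
import threading
import time


def run_with_limit(num_workers: int = 6, max_concurrent: int = 2) -> int:
    """Return the highest number of workers seen inside the resource at once."""
    slots = threading.Semaphore(max_concurrent)  # counts free slots
    state_lock = threading.Lock()                # protects the two counters
    active = 0
    peak = 0

    def worker() -> None:
        nonlocal active, peak
        with slots:  # blocks while all slots are taken
            with state_lock:
                active += 1
                peak = max(peak, active)
            time.sleep(0.01)  # simulated use of the shared resource
            with state_lock:
                active -= 1

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak
```

The returned peak never exceeds max_concurrent, because a worker can enter only after acquiring one of the semaphore's slots.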

5. Process Communication

Definition: The operation that allows processes to communicate and exchange data, which is crucial for collaboration in concurrent systems.

Methods:

- Inter-Process Communication (IPC) Mechanisms: Various methods for communication between processes include:

- Pipes: Allow unidirectional data flow from one process to another.

- Message Queues: Enable processes to send and receive messages asynchronously.

- Shared Memory: Allows multiple processes to access a common memory area for fast data sharing.

- Sockets: Used for communication between processes on the same machine or on different machines across a network.
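A pipe between a parent and a child process can be sketched as follows. The example assumes a UNIX-like system (it relies on os.fork), sends only a short message, and uses the hypothetical function name send_through_pipe.

```python
import os


def send_through_pipe(message: bytes) -> bytes:
    """Have a child process write `message` into a pipe; the parent reads it."""
    read_fd, write_fd = os.pipe()  # data flows one way: write_fd -> read_fd
    pid = os.fork()
    if pid == 0:
        # Child: the writer. Close the end it does not use.
        os.close(read_fd)
        os.write(write_fd, message)  # short messages are written in one call
        os.close(write_fd)
        os._exit(0)
    # Parent: the reader. Closing its copy of the write end lets read() see EOF.
    os.close(write_fd)
    chunks = []
    while True:
        chunk = os.read(read_fd, 4096)
        if not chunk:  # empty read means the writer closed the pipe
            break
        chunks.append(chunk)
    os.close(read_fd)
    os.waitpid(pid, 0)
    return b"".join(chunks)
```

Each side closes the pipe end it does not need. This matters because the reader only sees end-of-file once every copy of the write end has been closed.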


18. Describe the Command Line Interface (CLI) and GUI with an example.

Solution:

Command Line Interface (CLI) and Graphical User Interface (GUI) are two primary ways users interact with operating systems and applications. Each interface has its unique characteristics, advantages, and use cases.

1. Command Line Interface (CLI)

Definition:

- A CLI allows users to interact with the operating system or applications through text-based commands. Users type commands into a console or terminal window to perform specific tasks.

Characteristics:

- Text-Based Interaction: Users input commands in textual form, often requiring knowledge of command syntax and options.

- Minimal Resource Usage: CLI applications typically consume fewer system resources compared to GUI applications.

- Powerful and Flexible: Advanced users can execute complex commands, automate tasks with scripts, and perform batch operations efficiently.

Advantages:

- Speed: Experienced users can execute commands faster than navigating through menus in a GUI.

- Scripting Capabilities: Users can create scripts to automate repetitive tasks.

- Remote Access: CLI is often preferred for remote server management over SSH, as it allows control without a graphical display.

Disadvantages:

- Steeper Learning Curve: New users may find it intimidating and challenging to remember commands and syntax.

- Limited Visual Feedback: Lacks visual elements like icons, making it harder to visualize operations.
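Example:

- To show what "command syntax and options" look like in practice, here is a minimal sketch of a command-line program written with Python's argparse module. The program name greet and its options are hypothetical and exist only for this illustration.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Describe the command's syntax: one argument and two options."""
    parser = argparse.ArgumentParser(prog="greet", description="Print a greeting.")
    parser.add_argument("name", help="who to greet")
    parser.add_argument("-n", "--times", type=int, default=1,
                        help="how many times to print the greeting")
    parser.add_argument("--shout", action="store_true",
                        help="print the greeting in upper case")
    return parser


def run(argv) -> str:
    """Parse the typed command and return the text it would print."""
    args = build_parser().parse_args(argv)
    line = f"Hello, {args.name}!"
    if args.shout:
        line = line.upper()
    return "\n".join([line] * args.times)
```

Typing a command such as greet Asha --times 2 --shout in a terminal would correspond to calling run(["Asha", "--times", "2", "--shout"]). The user must know the option names, which is the learning-curve cost mentioned above.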

2. Graphical User Interface (GUI)

Definition:

- A GUI provides a visual way for users to interact with the operating system or applications through graphical elements such as windows, icons, buttons, and menus.

Characteristics:

- Visual Interaction: Users interact with the system using visual elements, making it more intuitive and user-friendly.

- Point-and-Click Functionality: Users can use a mouse or touch gestures to select and manipulate graphical objects.

- Multimedia Elements: GUIs can incorporate images, videos, and animations for richer interactions.

Advantages:

- Ease of Use: Intuitive design makes it accessible for users with varying levels of technical expertise.

- Visual Feedback: Provides immediate visual responses to user actions, helping users understand the consequences of their interactions.

- Multitasking: Users can easily switch between applications and windows.

Disadvantages:

- Resource Intensive: GUIs typically require more system resources (CPU, memory, and graphical capabilities).

- Slower for Advanced Users: Users accustomed to CLI may find GUIs slower for executing complex commands or operations.

Example:

- In a Windows operating system, a user might navigate to their documents folder by clicking on the "File Explorer" icon, then double-clicking the "Documents" folder to view its contents visually. They could also right-click a file to access options like "Open," "Delete," or "Rename" from a context menu.

(3 Marks questions)

1. Draw the structure of OS.

Solution:

2. Give any 3 examples of different types of operating systems.

Solution:

3. Explain two common communication models.

Solution:

Message Passing Model: Processes communicate by sending and receiving messages. This model is suitable for distributed systems and supports asynchronous communication.

Shared Memory Model: Processes communicate by accessing a common memory space. This model allows for fast communication but requires synchronization mechanisms to manage concurrent access.
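The two models can be contrasted with a short sketch that computes the same sum both ways. It assumes threads in place of separate processes for brevity, with a queue acting as the message channel; all function names are hypothetical.

```python
import queue
import threading


def sum_by_message_passing(numbers) -> int:
    """The sender puts each number in a mailbox; the receiver adds them up."""
    mailbox = queue.Queue()

    def sender() -> None:
        for n in numbers:
            mailbox.put(n)   # send a message
        mailbox.put(None)    # sentinel: no more messages

    t = threading.Thread(target=sender)
    t.start()
    total = 0
    while True:
        msg = mailbox.get()  # receive (blocks until a message arrives)
        if msg is None:
            break
        total += msg
    t.join()
    return total


def sum_by_shared_memory(numbers, num_workers: int = 2) -> int:
    """Workers update one shared total directly, so access needs a lock."""
    shared = {"total": 0}
    lock = threading.Lock()

    def worker(chunk) -> None:
        for n in chunk:
            with lock:
                shared["total"] += n

    chunks = [numbers[i::num_workers] for i in range(num_workers)]
    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["total"]
```

In the first function no data is shared, so no lock is needed; in the second, the lock is the synchronization mechanism that the shared memory model requires.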

4. What is meant by context switch? Explain.

Solution:

Definition: A context switch is the process of saving the state of a currently running process and loading the state of the next scheduled process. This allows multiple processes to share the CPU efficiently.

Explanation:

- During a context switch, the operating system saves the current process's registers, program counter, and other relevant data in its Process Control Block (PCB).

- The OS then loads the saved state of the next process from its PCB and restores its registers and program counter, allowing it to resume execution.

- Context switching introduces overhead but is essential for multitasking environments.
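The save-and-restore steps above can be modelled in a few lines. This is a toy simulation, not how a real kernel is written: the PCB and CPU classes and their fields are simplified assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PCB:
    """Simplified Process Control Block: just the saved CPU state."""
    pid: int
    program_counter: int = 0
    registers: dict = field(default_factory=dict)
    state: str = "ready"


class CPU:
    """Toy CPU holding the state of whichever process is running."""
    def __init__(self) -> None:
        self.program_counter = 0
        self.registers = {}
        self.current = None  # PCB of the running process, if any


def context_switch(cpu: CPU, next_pcb: PCB) -> None:
    old = cpu.current
    if old is not None:
        # Step 1: save the running process's state into its PCB.
        old.program_counter = cpu.program_counter
        old.registers = dict(cpu.registers)
        old.state = "ready"
    # Step 2: load the next process's saved state onto the CPU.
    cpu.program_counter = next_pcb.program_counter
    cpu.registers = dict(next_pcb.registers)
    next_pcb.state = "running"
    cpu.current = next_pcb
```

Switching away from a process and later back to it restores exactly the program counter and register values it had, which is why the process can resume as if it had never been interrupted.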

5. Explain the benefits of multithreaded programming.

Solution:

6. Difference between single-thread and multi-thread

Solution:

7. What is a thread? Write the benefits of threads.

Solution:

A thread is the smallest unit of processing that can be scheduled by an operating system. Threads exist within processes and share the same memory space.

Benefits:

- Efficiency: Threads are lightweight, with lower overhead compared to processes, allowing for faster context switching.

- Shared Memory: Threads within a process can easily share data and communicate through shared memory.

- Parallelism: Threads can run in parallel on multiple cores, improving performance for multi-core systems.

8. Explain how timers work.

Solution:

Timers are hardware or software mechanisms that generate periodic interrupts to enable the operating system to manage time-related functions.

Explanation:

- Timer Interrupts: A timer generates interrupts at set intervals, allowing the OS to preempt running processes and perform scheduling or other time-sensitive tasks.

- Scheduling: Timers enable time-sharing by allowing the OS to allocate CPU time to different processes, ensuring fairness and responsiveness.

- Real-Time Operations: In real-time systems, timers are crucial for maintaining deadlines and managing tasks that must occur at specific intervals.
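The idea of a timer ending a time slice can be sketched with a software timer. This is an analogy under assumptions: Python's threading.Timer stands in for a hardware timer interrupt, an Event stands in for the interrupt signal, and run_until_preempted is a hypothetical name.

```python
import threading


def run_until_preempted(time_slice: float) -> int:
    """Do work until a timer fires, then stop; return how much work was done."""
    preempt = threading.Event()
    # Software timer: calls preempt.set() once after `time_slice` seconds.
    timer = threading.Timer(time_slice, preempt.set)
    timer.start()

    work_done = 0
    while not preempt.is_set():  # the "process" runs until the timer fires
        work_done += 1

    timer.join()
    return work_done
```

In a real operating system the running process does not check a flag itself: the hardware timer interrupts the CPU, and the OS interrupt handler decides whether to switch to another process.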
